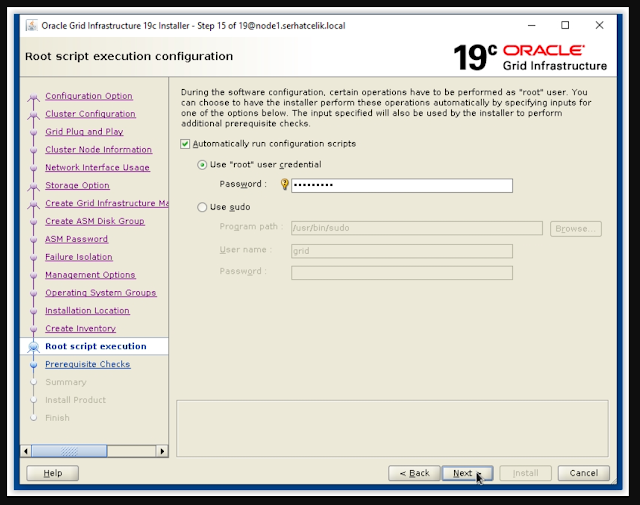

Step By Step Oracle 19C RAC Installation on Oracle Linux Part-1 OS

It is assumed that you have two servers running Oracle Linux.

IP ADDRESSES AND HOSTNAMES USED

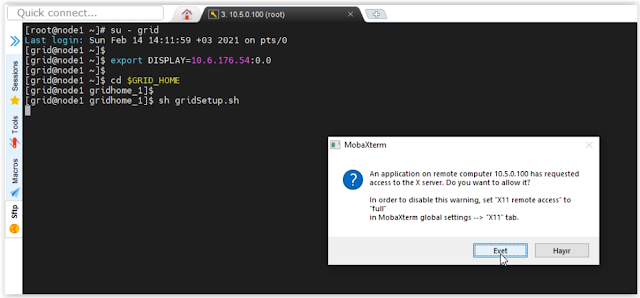

About SCAN

Ping: https://community.oracle.com/tech/apps-infra/discussion/2394322/scan-ip-is-not-reachable

Note: The SCAN will not be pingable from node1 or node2 even after the SCAN entries have been added to DNS; the SCAN addresses come online only after the RAC installation. Before the installation, however, we still need to confirm that the SCAN works, using the steps below. After every nslookup the order of the returned IPs changes, and that rotation confirms that the SCAN is working.

Public IP: The public IP address is the address of the server itself. It is the same as any other server IP address: a unique address that exists in /etc/hosts.

Private IP: Oracle RAC requires private IP addresses to manage CRS, the clusterware heartbeat process, and the Cache Fusion layer.

Virtual IP: Oracle uses a Virtual IP (VIP) for database access. The VIP must be on the same subnet as the public IP address. The VIP is used for RAC failover (TAF).
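The subnet rule above can be checked with a little shell arithmetic before the installer complains. The sketch below is illustrative only: `ip_to_int` and `same_subnet` are hypothetical helper names, and the addresses are taken from this guide's own listings (10.5.0.102 as the node1 VIP from /etc/hosts, 192.168.1.120 as the node1 private IP from ifcfg-eth1).

```sh
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# same_subnet IP1 IP2 NETMASK: succeed when both addresses fall in the
# same network for the given netmask.
same_subnet() {
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    m=$(ip_to_int "$3")
    [ $(( a & m )) -eq $(( b & m )) ]
}

# Public IP vs. VIP: expected to share a subnet.
if same_subnet 10.5.0.100 10.5.0.102 255.255.0.0; then
    echo "VIP is on the public subnet"
fi

# Public IP vs. private IP: expected to be on different subnets.
if ! same_subnet 10.5.0.100 192.168.1.120 255.255.0.0; then
    echo "private IP is on a separate subnet"
fi
```

Substituting the addresses of your own environment gives a quick sanity check before the network files are edited.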

SET NODE1 PUBLIC IP

IP Address : 10.5.0.100

Subnet : 255.255.0.0

Gateway : 10.5.XX

[root@node1 ~]# more /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=eth0

UUID=47592082-bffe-45cf...

IPADDR=10.5.0.100

NETMASK=255.255.0.0

GATEWAY=10.5.XX

DOMAIN=xxx.com.tr

DNS1=10.5.XX

DNS2=10.5.XX

[root@node1 ~]#

SET NODE2 PUBLIC IP

IP Address : 10.5.0.101

Subnet : 255.255.0.0

Gateway : 10.5.XX

[root@node2 ~]# more /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=eth0

UUID=47592082-bffe-45cf...

IPADDR=10.5.0.101

NETMASK=255.255.0.0

GATEWAY=10.5.XX

DOMAIN=xxx.com.tr

DNS1=10.5.XX

DNS2=10.5.XX

[root@node2 ~]#

CONFIGURATION OF SECOND NETWORK INTERFACE FOR INTERCONNECT CONNECTIONS

Use a separate network interface for the private IP, distinct from the one that carries the public IP. The two interfaces must also be on different IP subnets; otherwise the installation cannot continue (this is a prerequisite). I added a second network interface to both VM servers, which run on Oracle VM Server.

SET NODE1 PRIVATE IP

IP Address: 192.168.1.120

Subnet: 255.255.0.0

[root@node1 ~]# more /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=eth1

DEVICE=eth1

ONBOOT=yes

NM_CONTROLLED=no

IPADDR=192.168.1.120

NETMASK=255.255.0.0

[root@node1 ~]# service network restart

Restarting network (via systemctl): [ OK ]

[root@node1 ~]#

SET NODE2 PRIVATE IP

IP Address: 192.168.1.121

Subnet: 255.255.0.0

[root@node2 ~]# more /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=eth1

DEVICE=eth1

ONBOOT=yes

NM_CONTROLLED=no

IPADDR=192.168.1.121

NETMASK=255.255.0.0

[root@node2 ~]# service network restart

Restarting network (via systemctl): [ OK ]

[root@node2 ~]#

👉DISABLE SELINUX ON NODE1 AND NODE2
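For the SELinux step itself, a minimal sketch might look like the following. It assumes the standard /etc/selinux/config file with a `SELINUX=` line; `set_selinux_mode` is a hypothetical helper name, and whether to use `permissive` or `disabled` is a site decision rather than something this guide specifies.

```sh
#!/bin/sh
# set_selinux_mode FILE MODE: rewrite the SELINUX= line in FILE.
# MODE must be one of enforcing, permissive, or disabled.
set_selinux_mode() {
    file=$1
    mode=$2
    case $mode in
        enforcing|permissive|disabled) ;;
        *) echo "unknown SELinux mode: $mode" >&2; return 1 ;;
    esac
    tmp=$(mktemp) || return 1
    # Only the SELINUX= line is rewritten; SELINUXTYPE= is left alone.
    sed "s/^SELINUX=.*/SELINUX=$mode/" "$file" > "$tmp" && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return $status
}

# Example, run as root on each node:
#   set_selinux_mode /etc/selinux/config permissive
#   setenforce 0      # switch the running system to permissive immediately
#   getenforce        # show the current mode
```

The change in the config file takes effect at the next boot; `setenforce 0` only affects the running system.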

[root@node1 ~]# systemctl status avahi-daemon

● avahi-daemon.service - Avahi mDNS/DNS-SD Stack

Loaded: loaded (/usr/lib/systemd/system/avahi-daemon.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2022-02-16 17:35:25 CET; 1 day 17h ago

Main PID: 1454 (avahi-daemon)

Status: "avahi-daemon 0.7 starting up."

Tasks: 2 (limit: 1607258)

Memory: 2.0M

CGroup: /system.slice/avahi-daemon.service

+-1454 avahi-daemon: running [node1.local]

+-1491 avahi-daemon: chroot helper

Feb 16 17:43:19 node1 avahi-daemon[1454]: Registering new address record for 172.20.1.26 on eth0.IPv4.

Feb 16 17:43:20 node1 avahi-daemon[1454]: Joining mDNS multicast group on interface eth0.IPv6 with address fe80::221:f6ff:fecb:2871.

Feb 16 17:43:20 node1 avahi-daemon[1454]: New relevant interface eth0.IPv6 for mDNS.

Feb 16 17:43:20 node1 avahi-daemon[1454]: Registering new address record for fe80::221:f6ff:fecb:2871 on eth0.*.

Feb 16 17:45:02 node1 avahi-daemon[1454]: Joining mDNS multicast group on interface eth1.IPv4 with address 192.168.128.3.

Feb 16 17:45:02 node1 avahi-daemon[1454]: New relevant interface eth1.IPv4 for mDNS.

Feb 16 17:45:02 node1 avahi-daemon[1454]: Registering new address record for 192.168.128.3 on eth1.IPv4.

Feb 16 17:45:04 node1 avahi-daemon[1454]: Joining mDNS multicast group on interface eth1.IPv6 with address fe80::221:f6ff:feeb:840d.

Feb 16 17:45:04 node1 avahi-daemon[1454]: New relevant interface eth1.IPv6 for mDNS.

Feb 16 17:45:04 node1 avahi-daemon[1454]: Registering new address record for fe80::221:f6ff:feeb:840d on eth1.*.

[root@node1 ~]#

[root@node1 ~]#

[root@node1 ~]#

[root@node1 ~]# systemctl stop avahi-daemon

Warning: Stopping avahi-daemon.service, but it can still be activated by:

avahi-daemon.socket

[root@node1 ~]#

[root@node1 ~]#

[root@node1 ~]# systemctl disable avahi-daemon

Removed /etc/systemd/system/multi-user.target.wants/avahi-daemon.service.

Removed /etc/systemd/system/sockets.target.wants/avahi-daemon.socket.

Removed /etc/systemd/system/dbus-org.freedesktop.Avahi.service.

[root@node1 ~]#

[root@node1 ~]#

[root@node1 ~]# systemctl status avahi-daemon

● avahi-daemon.service - Avahi mDNS/DNS-SD Stack

Loaded: loaded (/usr/lib/systemd/system/avahi-daemon.service; disabled; vendor preset: enabled)

Active: inactive (dead) since Fri 2022-02-18 10:36:04 CET; 1min 35s ago

Main PID: 1454 (code=exited, status=0/SUCCESS)

Status: "avahi-daemon 0.7 starting up."

Feb 18 10:36:04 node1 systemd[1]: Stopping Avahi mDNS/DNS-SD Stack...

Feb 18 10:36:04 node1 avahi-daemon[1454]: Got SIGTERM, quitting.

Feb 18 10:36:04 node1 avahi-daemon[1454]: Leaving mDNS multicast group on interface virbr0.IPv4 with address 192.168.122.1.

Feb 18 10:36:04 node1 avahi-daemon[1454]: Leaving mDNS multicast group on interface eth1.IPv6 with address fe80::221:f6ff:feeb:840d.

Feb 18 10:36:04 node1 avahi-daemon[1454]: Leaving mDNS multicast group on interface eth1.IPv4 with address 192.168.128.3.

Feb 18 10:36:04 node1 avahi-daemon[1454]: Leaving mDNS multicast group on interface eth0.IPv6 with address fe80::221:f6ff:fecb:2871.

Feb 18 10:36:04 node1 avahi-daemon[1454]: Leaving mDNS multicast group on interface eth0.IPv4 with address 172.20.1.26.

Feb 18 10:36:04 node1 avahi-daemon[1454]: avahi-daemon 0.7 exiting.

Feb 18 10:36:04 node1 systemd[1]: avahi-daemon.service: Succeeded.

Feb 18 10:36:04 node1 systemd[1]: Stopped Avahi mDNS/DNS-SD Stack.

[root@node1 ~]#

[root@node2 ~]# systemctl status avahi-daemon

● avahi-daemon.service - Avahi mDNS/DNS-SD Stack

Loaded: loaded (/usr/lib/systemd/system/avahi-daemon.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2022-02-16 17:29:15 CET; 1 day 17h ago

Main PID: 1445 (avahi-daemon)

Status: "avahi-daemon 0.7 starting up."

Tasks: 2 (limit: 1607258)

Memory: 3.0M

CGroup: /system.slice/avahi-daemon.service

+-1445 avahi-daemon: running [node2.local]

+-1489 avahi-daemon: chroot helper

Feb 16 17:29:15 node2 avahi-daemon[1445]: Registering new address record for 192.168.128.4 on eth1.IPv4.

Feb 16 17:29:16 node2 avahi-daemon[1445]: Joining mDNS multicast group on interface virbr0.IPv4 with address 192.168.122.1.

Feb 16 17:29:16 node2 avahi-daemon[1445]: New relevant interface virbr0.IPv4 for mDNS.

Feb 16 17:29:16 node2 avahi-daemon[1445]: Registering new address record for 192.168.122.1 on virbr0.IPv4.

Feb 16 17:29:16 node2 avahi-daemon[1445]: Joining mDNS multicast group on interface eth0.IPv6 with address fe80::221:f6ff:fe96:60b4.

Feb 16 17:29:16 node2 avahi-daemon[1445]: New relevant interface eth0.IPv6 for mDNS.

Feb 16 17:29:16 node2 avahi-daemon[1445]: Registering new address record for fe80::221:f6ff:fe96:60b4 on eth0.*.

Feb 16 17:29:17 node2 avahi-daemon[1445]: Joining mDNS multicast group on interface eth1.IPv6 with address fe80::221:f6ff:fee6:8bf0.

Feb 16 17:29:17 node2 avahi-daemon[1445]: New relevant interface eth1.IPv6 for mDNS.

Feb 16 17:29:17 node2 avahi-daemon[1445]: Registering new address record for fe80::221:f6ff:fee6:8bf0 on eth1.*.

[root@node2 ~]#

[root@node2 ~]#

[root@node2 ~]#

[root@node2 ~]# systemctl stop avahi-daemon

Warning: Stopping avahi-daemon.service, but it can still be activated by:

avahi-daemon.socket

[root@node2 ~]# systemctl disable avahi-daemon

Removed /etc/systemd/system/multi-user.target.wants/avahi-daemon.service.

Removed /etc/systemd/system/sockets.target.wants/avahi-daemon.socket.

Removed /etc/systemd/system/dbus-org.freedesktop.Avahi.service.

[root@node2 ~]#

[root@node2 ~]#

[root@node2 ~]#

[root@node2 ~]# systemctl status avahi-daemon

● avahi-daemon.service - Avahi mDNS/DNS-SD Stack

Loaded: loaded (/usr/lib/systemd/system/avahi-daemon.service; disabled; vendor preset: enabled)

Active: inactive (dead) since Fri 2022-02-18 10:41:35 CET; 42s ago

Main PID: 1445 (code=exited, status=0/SUCCESS)

Status: "avahi-daemon 0.7 starting up."

Feb 18 10:41:35 node2 systemd[1]: Stopping Avahi mDNS/DNS-SD Stack...

Feb 18 10:41:35 node2 avahi-daemon[1445]: Got SIGTERM, quitting.

Feb 18 10:41:35 node2 avahi-daemon[1445]: Leaving mDNS multicast group on interface virbr0.IPv4 with address 192.168.122.1.

Feb 18 10:41:35 node2 avahi-daemon[1445]: Leaving mDNS multicast group on interface eth1.IPv6 with address fe80::221:f6ff:fee6:8bf0.

Feb 18 10:41:35 node2 avahi-daemon[1445]: Leaving mDNS multicast group on interface eth1.IPv4 with address 192.168.128.4.

Feb 18 10:41:35 node2 avahi-daemon[1445]: Leaving mDNS multicast group on interface eth0.IPv6 with address fe80::221:f6ff:fe96:60b4.

Feb 18 10:41:35 node2 avahi-daemon[1445]: Leaving mDNS multicast group on interface eth0.IPv4 with address 172.20.1.27.

Feb 18 10:41:35 node2 avahi-daemon[1445]: avahi-daemon 0.7 exiting.

Feb 18 10:41:35 node2 systemd[1]: avahi-daemon.service: Succeeded.

Feb 18 10:41:35 node2 systemd[1]: Stopped Avahi mDNS/DNS-SD Stack.

[root@node2 ~]#

DISABLE FIREWALLD AND NETWORKMANAGER ON NODE1 AND NODE2

[root@node1 ~]# systemctl stop firewalld.service

[root@node1 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@node1 ~]# systemctl stop NetworkManager

[root@node1 ~]# systemctl disable NetworkManager

Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.

Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.

Removed symlink /etc/systemd/system/network-online.target.wants/NetworkManager-wait-online.service.

[root@node1 ~]#

[root@node2 ~]# systemctl stop firewalld.service

[root@node2 ~]#

[root@node2 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@node2 ~]#

[root@node2 ~]# systemctl stop NetworkManager

[root@node2 ~]#

[root@node2 ~]# systemctl disable NetworkManager

Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.

Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.

Removed symlink /etc/systemd/system/network-online.target.wants/NetworkManager-wait-online.service.

[root@node2 ~]#
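The stop and disable pairs above can be collapsed into one loop, since `systemctl disable --now` does both in a single call. This is a sketch, not the procedure the transcripts show: `disable_services` is a hypothetical helper, and the `SYSTEMCTL` variable is an assumption added only so the loop can be dry-run first. The socket unit is listed because of the warning that avahi-daemon.service "can still be activated by avahi-daemon.socket".

```sh
#!/bin/sh
# disable_services NAME...: stop and disable each named unit.
# Set SYSTEMCTL="echo systemctl" to print the commands instead of running them.
disable_services() {
    for svc in "$@"; do
        # $SYSTEMCTL is left unquoted on purpose so that a value such as
        # "echo systemctl" splits into a command plus its first argument.
        ${SYSTEMCTL:-systemctl} disable --now "$svc" || return 1
    done
}

# Example, run as root on each node:
#   disable_services avahi-daemon.socket avahi-daemon firewalld NetworkManager
```

Stopping NetworkManager over an SSH session can drop the connection if the interfaces are still managed by it, so a console session is the safer place to run this.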

INSTALLATION OF NEEDED PACKAGES ON NODE1 AND NODE2

Kernel parameter values before installing the preinstall package:

[root@node1 ~]# cat /etc/sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

[root@node2 ~]# cat /etc/sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

Run the command below on both nodes to install the required packages.

[root@node1 ~]# dnf install oracle-database-preinstall-19c

[root@node2 ~]# dnf install oracle-database-preinstall-19c

Last metadata expiration check: 3:50:27 ago on Fri 18 Feb 2022 07:03:42 AM CET.

Dependencies resolved.

===========================================================================================================

Package Architecture Version Repository Size

===========================================================================================================

Installing:

oracle-database-preinstall-19c x86_64 1.0-2.el8 ol8_appstream 31 k

Installing dependencies:

glibc-devel x86_64 2.28-164.0.3.el8 ol8_baseos_latest 1.0 M

ksh x86_64 20120801-254.0.1.el8 ol8_appstream 927 k

libaio-devel x86_64 0.3.112-1.el8 ol8_baseos_latest 19 k

libnsl x86_64 2.28-164.0.3.el8 ol8_baseos_latest 104 k

libstdc++-devel x86_64 8.5.0-4.0.2.el8_5 ol8_appstream 2.1 M

libxcrypt-devel x86_64 4.1.1-6.el8 ol8_baseos_latest 25 k

make x86_64 1:4.2.1-10.el8 ol8_baseos_latest 498 k

Transaction Summary

==================================================================================================

Install 8 Packages

Total download size: 4.7 M

Installed size: 17 M

Is this ok [y/N]: y

Downloading Packages:

(1/8): libaio-devel-0.3.112-1.el8.x86_64.rpm 393 kB/s | 19 kB 00:00

(2/8): libxcrypt-devel-4.1.1-6.el8.x86_64.rpm 1.3 MB/s | 25 kB 00:00

(3/8): libnsl-2.28-164.0.3.el8.x86_64.rpm 1.4 MB/s | 104 kB 00:00

(4/8): glibc-devel-2.28-164.0.3.el8.x86_64.rpm 11 MB/s | 1.0 MB 00:00

(5/8): make-4.2.1-10.el8.x86_64.rpm 9.8 MB/s | 498 kB 00:00

(6/8): ksh-20120801-254.0.1.el8.x86_64.rpm 18 MB/s | 927 kB 00:00

(7/8): oracle-database-preinstall-19c-1.0-2.el8.x86_64.rpm 2.3 MB/s | 31 kB 00:00

(8/8): libstdc++-devel-8.5.0-4.0.2.el8_5.x86_64.rpm 42 MB/s | 2.1 MB 00:00

--------------------------------------------------------------------------------------------------

Total 31 MB/s | 4.7 MB 00:00

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Installing : libxcrypt-devel-4.1.1-6.el8.x86_64 1/8

Installing : glibc-devel-2.28-164.0.3.el8.x86_64 2/8

Running scriptlet: glibc-devel-2.28-164.0.3.el8.x86_64 2/8

Installing : libstdc++-devel-8.5.0-4.0.2.el8_5.x86_64 3/8

Installing : ksh-20120801-254.0.1.el8.x86_64 4/8

Running scriptlet: ksh-20120801-254.0.1.el8.x86_64 4/8

Installing : make-1:4.2.1-10.el8.x86_64 5/8

Running scriptlet: make-1:4.2.1-10.el8.x86_64 5/8

Installing : libnsl-2.28-164.0.3.el8.x86_64 6/8

Installing : libaio-devel-0.3.112-1.el8.x86_64 7/8

Running scriptlet: oracle-database-preinstall-19c-1.0-2.el8.x86_64 8/8

Installing : oracle-database-preinstall-19c-1.0-2.el8.x86_64 8/8

Running scriptlet: oracle-database-preinstall-19c-1.0-2.el8.x86_64 8/8

/sbin/ldconfig: /etc/ld.so.conf.d/kernel-5.4.17-2011.1.2.el8uek.x86_64.conf:6: hwcap directive ignored

Verifying : glibc-devel-2.28-164.0.3.el8.x86_64 1/8

Verifying : libaio-devel-0.3.112-1.el8.x86_64 2/8

Verifying : libnsl-2.28-164.0.3.el8.x86_64 3/8

Verifying : libxcrypt-devel-4.1.1-6.el8.x86_64 4/8

Verifying : make-1:4.2.1-10.el8.x86_64 5/8

Verifying : ksh-20120801-254.0.1.el8.x86_64 6/8

Verifying : libstdc++-devel-8.5.0-4.0.2.el8_5.x86_64 7/8

Verifying : oracle-database-preinstall-19c-1.0-2.el8.x86_64 8/8

Installed:

glibc-devel-2.28-164.0.3.el8.x86_64 ksh-20120801-254.0.1.el8.x86_64 libaio-devel-0.3.112-1.el8.x86_64

libnsl-2.28-164.0.3.el8.x86_64 libstdc++-devel-8.5.0-4.0.2.el8_5.x86_64 libxcrypt-devel-4.1.1-6.el8.x86_64

make-1:4.2.1-10.el8.x86_64 oracle-database-preinstall-19c-1.0-2.el8.x86_64

Complete!

Note1: The oracle-database-preinstall-19c package creates only some of the required groups.

Note2: After the 19c preinstall package is installed on a server, the sysctl values are appended to the /etc/sysctl.conf file automatically.

[root@node1 ~]# cat /etc/sysctl.conf

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

# For more information, see sysctl.conf(5) and sysctl.d(5).

# oracle-database-preinstall-19c setting for fs.file-max is 6815744

fs.file-max = 6815744

# oracle-database-preinstall-19c setting for kernel.sem is '250 32000 100 128'

kernel.sem = 250 32000 100 128

# oracle-database-preinstall-19c setting for kernel.shmmni is 4096

kernel.shmmni = 4096

# oracle-database-preinstall-19c setting for kernel.shmall is 1073741824 on x86_64

kernel.shmall = 1073741824

# oracle-database-preinstall-19c setting for kernel.shmmax is 4398046511104 on x86_64

kernel.shmmax = 4398046511104

# oracle-database-preinstall-19c setting for kernel.panic_on_oops is 1 per Orabug 19212317

kernel.panic_on_oops = 1

# oracle-database-preinstall-19c setting for net.core.rmem_default is 262144

net.core.rmem_default = 262144

# oracle-database-preinstall-19c setting for net.core.rmem_max is 4194304

net.core.rmem_max = 4194304

# oracle-database-preinstall-19c setting for net.core.wmem_default is 262144

net.core.wmem_default = 262144

# oracle-database-preinstall-19c setting for net.core.wmem_max is 1048576

net.core.wmem_max = 1048576

# oracle-database-preinstall-19c setting for net.ipv4.conf.all.rp_filter is 2

net.ipv4.conf.all.rp_filter = 2

# oracle-database-preinstall-19c setting for net.ipv4.conf.default.rp_filter is 2

net.ipv4.conf.default.rp_filter = 2

# oracle-database-preinstall-19c setting for fs.aio-max-nr is 1048576

fs.aio-max-nr = 1048576

# oracle-database-preinstall-19c setting for net.ipv4.ip_local_port_range is 9000 65500

net.ipv4.ip_local_port_range = 9000 65500

CROSSCHECK KERNEL PARAMETER FILES ON NODE1 AND NODE2

[root@node1 ~]# more /etc/sysctl.conf

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

kernel.shmall = 1073741824

kernel.shmmax = 4398046511104

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

fs.aio-max-nr = 1048576

net.ipv4.ip_local_port_range = 9000 65500

[root@node1 ~]# more /etc/security/limits.conf

grid soft nofile 1024

grid hard nofile 65536

grid soft nproc 2047

grid hard nproc 16384

grid soft stack 10240

grid hard stack 32768

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft stack 10240

oracle hard stack 32768

[root@node1 ~]#

[root@node2 ~]# more /etc/sysctl.conf

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

kernel.shmall = 1073741824

kernel.shmmax = 4398046511104

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

fs.aio-max-nr = 1048576

net.ipv4.ip_local_port_range = 9000 65500

[root@node2 ~]#

[root@node2 ~]# more /etc/security/limits.conf

grid soft nofile 1024

grid hard nofile 65536

grid soft nproc 2047

grid hard nproc 16384

grid soft stack 10240

grid hard stack 32768

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft stack 10240

oracle hard stack 32768
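Comparing the two nodes by eye is error-prone, so the crosscheck can be scripted. The sketch below is one possible approach: `check_sysctl` is a hypothetical helper that parses a sysctl.conf-style file with awk and compares it against the values listed above. It does not query the running kernel.

```sh
#!/bin/sh
# check_sysctl FILE: report MISMATCH/MISSING lines for the kernel
# parameters listed in this guide; exit status 0 means everything matches.
check_sysctl() {
    awk -F' *= *' '
        BEGIN {
            want["fs.file-max"] = "6815744"
            want["kernel.sem"] = "250 32000 100 128"
            want["kernel.shmmni"] = "4096"
            want["kernel.shmall"] = "1073741824"
            want["kernel.shmmax"] = "4398046511104"
            want["net.core.rmem_default"] = "262144"
            want["net.core.rmem_max"] = "4194304"
            want["net.core.wmem_default"] = "262144"
            want["net.core.wmem_max"] = "1048576"
            want["fs.aio-max-nr"] = "1048576"
            want["net.ipv4.ip_local_port_range"] = "9000 65500"
        }
        /^[ \t]*#/ || NF < 2 { next }      # skip comments and non-settings
        {
            key = $1; val = $2
            gsub(/^[ \t]+|[ \t]+$/, "", key)
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            if (key in want) {
                seen[key] = 1
                if (val != want[key]) {
                    printf "MISMATCH %s: %s (expected %s)\n", key, val, want[key]
                    bad = 1
                }
            }
        }
        END {
            for (k in want)
                if (!(k in seen)) { printf "MISSING %s\n", k; bad = 1 }
            exit bad + 0
        }
    ' "$1"
}

# Example, run on each node:
#   check_sysctl /etc/sysctl.conf && echo "kernel parameters OK"
```

To confirm that the values are also active in the running kernel, `sysctl -p` reloads the file and `sysctl -a` lists the live settings.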

Install the bind package:

# dnf install bind

Last metadata expiration check: 2:27:32 ago on Mon 21 Feb 2022 10:06:42 AM CET.

Dependencies resolved.

==================================================================================================

Package Architecture Version Repository Size

==================================================================================================

Installing:

bind x86_64 32:9.11.26-6.el8 ol8_appstream 2.1 M

Transaction Summary

=================================================

Install 1 Package

Total download size: 2.1 M

Installed size: 4.5 M

Is this ok [y/N]: y

Downloading Packages:

bind-9.11.26-6.el8.x86_64.rpm 15 MB/s | 2.1 MB 00:00

-----------------------------------------------------------------------------------

Total 15 MB/s | 2.1 MB 00:00

Running transaction check

Transaction check succeeded.

Running transaction test

Transaction test succeeded.

Running transaction

Preparing : 1/1

Running scriptlet: bind-32:9.11.26-6.el8.x86_64 1/1

Installing : bind-32:9.11.26-6.el8.x86_64 1/1

Running scriptlet: bind-32:9.11.26-6.el8.x86_64 1/1

/sbin/ldconfig: /etc/ld.so.conf.d/kernel-5.4.17-2011.1.2.el8uek.x86_64.conf:6: hwcap directive ignored

Verifying : bind-32:9.11.26-6.el8.x86_64 1/1

Installed:

bind-32:9.11.26-6.el8.x86_64

Complete!

👉CREATE NEEDED USERS & GROUPS ON NODE1 AND NODE2

# groupadd -g 54321 oinstall

# groupadd -g 54322 dba

# groupadd -g 54323 oper

# groupadd -g 54324 backupdba

# groupadd -g 54325 dgdba

# groupadd -g 54326 kmdba

# groupadd -g 54327 asmdba

# groupadd -g 54328 asmoper

# groupadd -g 54329 asmadmin

# groupadd -g 54330 racdba
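Because the preinstall package has already created some of these groups, running every `groupadd` blindly will produce "already exists" errors. The sketch below prints only the commands that are still needed. `missing_group_cmds` is a hypothetical helper, the group IDs follow the list above with oinstall assumed to be 54321, and the `useradd` line for the grid user in the comments is an assumption, since this guide does not show how that user is created.

```sh
#!/bin/sh
# missing_group_cmds GROUP_FILE: print a groupadd command for every group
# in the list that does not yet have an entry in GROUP_FILE.
missing_group_cmds() {
    while read -r gid name; do
        if ! grep -q "^$name:" "$1"; then
            echo "groupadd -g $gid $name"
        fi
    done <<EOF
54321 oinstall
54322 dba
54323 oper
54324 backupdba
54325 dgdba
54326 kmdba
54327 asmdba
54328 asmoper
54329 asmadmin
54330 racdba
EOF
}

# Example, run as root on each node:
#   missing_group_cmds /etc/group          # review the output first
#   missing_group_cmds /etc/group | sh     # then apply it
#
# Assumed, not shown in this guide: creating the grid user afterwards, e.g.
#   useradd -g oinstall -G asmadmin,asmdba,asmoper,racdba grid
```

Printing the commands before piping them to `sh` keeps the step reviewable and makes it safe to re-run.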

CREATE AND GIVE NEEDED PERMISSIONS FOR DIRECTORIES ON NODE1 AND NODE2

[root@node1 ~]# mkdir -p /u01/app/grid/19.3.0/gridhome_1

[root@node1 ~]# mkdir -p /u01/app/grid/gridbase/

[root@node1 ~]# mkdir -p /u01/app/oracle/database/19.3.0/dbhome_1

[root@node1 ~]# chown -R oracle:oinstall /u01/

[root@node1 ~]# chown -R grid:oinstall /u01/app/grid

[root@node1 ~]# chmod -R 775 /u01/

[root@node1 ~]#

[root@node2 ~]# mkdir -p /u01/app/grid/19.3.0/gridhome_1

[root@node2 ~]# mkdir -p /u01/app/grid/gridbase/

[root@node2 ~]# mkdir -p /u01/app/oracle/database/19.3.0/dbhome_1

[root@node2 ~]# chown -R oracle:oinstall /u01/

[root@node2 ~]# chown -R grid:oinstall /u01/app/grid

[root@node2 ~]# chmod -R 775 /u01/

[root@node2 ~]#

UPDATE ORACLE & GRID USERS PROFILE ON NODE1 AND NODE2

[root@node1 ~]# vi /home/oracle/.bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_HOSTNAME=node1.serhatcelik.local

export ORACLE_UNQNAME=SERHAT19C

export ORACLE_BASE=/u01/app/oracle/database/19.3.0/

export DB_HOME=$ORACLE_BASE/dbhome_1

export ORACLE_HOME=$DB_HOME

export ORACLE_SID=SERHAT19C1

export ORACLE_TERM=xterm

export PATH=/usr/sbin:/usr/local/bin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

[root@node1 ~]# vi /home/grid/.bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_HOSTNAME=node1.serhatcelik.local

export ORACLE_BASE=/u01/app/grid/gridbase/

export ORACLE_HOME=/u01/app/grid/19.3.0/gridhome_1

export GRID_BASE=/u01/app/grid/gridbase/

export GRID_HOME=/u01/app/grid/19.3.0/gridhome_1

export ORACLE_SID=+ASM1

export ORACLE_TERM=xterm

export PATH=/usr/sbin:/usr/local/bin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

[root@node2 ~]# vi /home/oracle/.bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_HOSTNAME=node2.serhatcelik.local

export ORACLE_UNQNAME=SERHAT19C

export ORACLE_BASE=/u01/app/oracle/database/19.3.0/

export DB_HOME=$ORACLE_BASE/dbhome_1

export ORACLE_HOME=$DB_HOME

export ORACLE_SID=SERHAT19C2

export ORACLE_TERM=xterm

export PATH=/usr/sbin:/usr/local/bin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

[root@node2 ~]# vi /home/grid/.bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_HOSTNAME=node2.serhatcelik.local

export ORACLE_BASE=/u01/app/grid/gridbase/

export ORACLE_HOME=/u01/app/grid/19.3.0/gridhome_1

export GRID_BASE=/u01/app/grid/gridbase/

export GRID_HOME=/u01/app/grid/19.3.0/gridhome_1

export ORACLE_SID=+ASM2

export ORACLE_TERM=xterm

export PATH=/usr/sbin:/usr/local/bin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

UPDATE /etc/hosts FILES ON NODE1 AND NODE2

[root@node1 ~]# vi /etc/hosts

10.5.0.100 node1 node1.serhatcelik.local

10.5.0.101 node2 node2.serhatcelik.local

10.5.0.102 node1-vip node1-vip.serhatcelik.local

10.5.0.103 node2-vip node2-vip.serhatcelik.local

#10.5.0.104 srhtdb-scan srhtdb-scan.serhatcelik.local

#10.5.0.105 srhtdb-scan srhtdb-scan.serhatcelik.local

#10.5.0.106 srhtdb-scan srhtdb-scan.serhatcelik.local

192.168.1.120 node1-priv node1-priv.serhatcelik.local

192.168.1.121 node2-priv node2-priv.serhatcelik.local

[root@node2 ~]# vi /etc/hosts

10.5.0.100 node1 node1.serhatcelik.local

10.5.0.101 node2 node2.serhatcelik.local

10.5.0.102 node1-vip node1-vip.serhatcelik.local

10.5.0.103 node2-vip node2-vip.serhatcelik.local

#10.5.0.104 srhtdb-scan srhtdb-scan.serhatcelik.local

#10.5.0.105 srhtdb-scan srhtdb-scan.serhatcelik.local

#10.5.0.106 srhtdb-scan srhtdb-scan.serhatcelik.local

192.168.1.120 node1-priv node1-priv.serhatcelik.local

192.168.1.121 node2-priv node2-priv.serhatcelik.local

DNS SETTINGS ON NODE1 AND NODE2

DNS is required for the RAC installation; it is another prerequisite.

Edit the /etc/resolv.conf file as shown below:

[root@node1 ~]# more /etc/resolv.conf

nameserver 127.0.0.1

search serhatcelik.local

[root@node1 ~]#

Test DNS

[root@node1 ~]# nslookup srhtdb-scan

Server: 127.0.0.1

Address: 127.0.0.1#53

Name: srhtdb-scan.serhatcelik.local

Address: 10.5.0.106

Name: srhtdb-scan.serhatcelik.local

Address: 10.5.0.104

Name: srhtdb-scan.serhatcelik.local

Address: 10.5.0.105

Name: srhtdb-scan.serhatcelik.local

Address: 10.5.0.106

Name: srhtdb-scan.serhatcelik.local

Address: 10.5.0.104

Name: srhtdb-scan.serhatcelik.local

Address: 10.5.0.105
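The DNS test can be turned into a quick check that the SCAN name resolves to three distinct addresses. The sketch below only parses nslookup output in the format shown above; `count_scan_ips` is a hypothetical helper name.

```sh
#!/bin/sh
# count_scan_ips: read nslookup output on stdin and print the number of
# distinct addresses returned for the queried name. The DNS server's own
# "Address:" line is ignored because it appears before any "Name:" line.
count_scan_ips() {
    awk '/^Name:/ { in_answer = 1; next }
         in_answer && /^Address:/ { print $2; in_answer = 0 }' |
        sort -u | wc -l | tr -d ' '
}

# Example, run on each node (a value of 3 is expected for this setup):
#   nslookup srhtdb-scan | count_scan_ips
```

Running `nslookup srhtdb-scan` several times in a row and comparing the first address returned shows the round-robin rotation described in the SCAN note.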

NTP CONFIGURATION ON NODE1 AND NODE2

NTP is also required for the RAC installation; it is another prerequisite.

Apply the steps below on NODE1 and NODE2.

Specify your NTP server address in /etc/chrony.conf. On most networks chronyd is a better choice than ntpd for keeping computers synchronized with the Network Time Protocol.

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

#pool 2.pool.ntp.org iburst

# Add this IP (ORAAM IP address)

server 172.17.136.10

[root@node1 ~]# sudo systemctl stop chronyd

[root@node1 ~]#

[root@node1 ~]#

[root@node1 ~]# sudo systemctl start chronyd

[root@node1 ~]#

[root@node1 ~]# timedatectl status

Local time: Thu 2022-02-17 14:58:05 CET

Universal time: Thu 2022-02-17 13:58:05 UTC

RTC time: Thu 2022-02-17 13:58:06

Time zone: Europe/Berlin (CET, +0100)

System clock synchronized: yes

NTP service: active

RTC in local TZ: no

[root@node1 ~]#

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

#pool 2.pool.ntp.org iburst

# Add this IP (ORAAM IP address)

server 172.17.136.10

[root@node2 ~]# sudo systemctl stop chronyd

[root@node2 ~]#

[root@node2 ~]#

[root@node2 ~]# sudo systemctl start chronyd

[root@node2 ~]#

[root@node2 ~]# timedatectl status

Local time: Thu 2022-02-17 14:58:05 CET

Universal time: Thu 2022-02-17 13:58:05 UTC

RTC time: Thu 2022-02-17 13:58:06

Time zone: Europe/Berlin (CET, +0100)

System clock synchronized: yes

NTP service: active

RTC in local TZ: no

[root@node2 ~]#
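The line that matters in the `timedatectl status` output is "System clock synchronized: yes". A small filter makes that check scriptable on both nodes. This is a sketch that assumes the output format shown above; `clock_synced` is a hypothetical helper name.

```sh
#!/bin/sh
# clock_synced: read `timedatectl status` output on stdin and succeed only
# when it reports that the system clock is synchronized.
clock_synced() {
    grep -Eq '^[[:space:]]*System clock synchronized:[[:space:]]*yes[[:space:]]*$'
}

# Example, run on each node:
#   timedatectl status | clock_synced && echo "clock is synchronized"
#   chronyc sources      # lists the time servers chronyd is using
```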

Step By Step Oracle 19C RAC Installation on Oracle Linux Part-2 GRID

2. PREPARATION AND SETUP OF THE GRID ENVIRONMENT

We need shared discs for the RAC installation. First, I created the volume below on my Dell storage. Second, I created a server cluster consisting of Node1 and Node2.

Finally, I mapped the test volume to the server cluster using iSCSI. You should get help from your Linux system administrator or storage administrator for these steps. I performed all of the operations myself because I manage both the storage and the Linux servers.

CHECK DISKS ON NODE1 AND NODE2

[root@node1 ~]# fdisk -l | grep /dev/mapper/asm

Disk /dev/mapper/asmvg01-ASM_New_1: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg03-ASM_New_3: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg06-ASM_New_6: 200 GiB, 214748364800 bytes, 419430400 sectors

Disk /dev/mapper/asmvg04-ASM_New_4: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg05-ASM_New_5: 200 GiB, 214748364800 bytes, 419430400 sectors

Disk /dev/mapper/asmvg02-ASM_New_2: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg07-ASM_New_7: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk /dev/mapper/asmvg07-ASM_New_8: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk /dev/mapper/asmvg07-ASM_New_9: 10 GiB, 10737418240 bytes, 20971520 sectors

[root@node2 ~]# fdisk -l | grep /dev/mapper/asm

Disk /dev/mapper/asmvg01-ASM_New_1: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg03-ASM_New_3: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg06-ASM_New_6: 200 GiB, 214748364800 bytes, 419430400 sectors

Disk /dev/mapper/asmvg04-ASM_New_4: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg05-ASM_New_5: 200 GiB, 214748364800 bytes, 419430400 sectors

Disk /dev/mapper/asmvg02-ASM_New_2: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg07-ASM_New_7: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk /dev/mapper/asmvg07-ASM_New_8: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk /dev/mapper/asmvg07-ASM_New_9: 10 GiB, 10737418240 bytes, 20971520 sectors
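Both nodes must see exactly the same shared disks, but fdisk does not always print them in the same order. A minimal sketch for comparing the two listings follows; normalize_disk_list is a hypothetical helper name, and the /tmp file names in the usage comment are only examples.

```shell
# Hypothetical helper: turns `fdisk -l | grep /dev/mapper/asm` output (stdin)
# into sorted "device bytes" pairs so that two nodes can be compared with diff.
normalize_disk_list() {
    sed -n 's|^Disk \(/dev/[^:]*\): .*, \([0-9][0-9]*\) bytes.*|\1 \2|p' | sort
}

# Typical use (not executed here):
#   ssh node1 'fdisk -l | grep /dev/mapper/asm' | normalize_disk_list > /tmp/node1.disks
#   ssh node2 'fdisk -l | grep /dev/mapper/asm' | normalize_disk_list > /tmp/node2.disks
#   diff /tmp/node1.disks /tmp/node2.disks && echo "both nodes see the same disks"
```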

!!! APPLY BELOW STEPS ONLY ON NODE1 !!!

NO OPERATION MUST BE DONE ON NODE2 !!!

!!! DISCS IN THE DISC GROUP WE WILL CREATE FOR ASM SHOULD BE THE SAME SIZE !!!

!!! FURTHER, OUR DISCS MUST BE UNFORMATTED AND UNUSED !!!

DISC PARTITION PROCESS IS DONE ONLY ON NODE1

Repeat the following operations for every disc that will be used.

[root@node1 ~]# fdisk /dev/sda

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x521f0f8c.

The device presents a logical sector size that is smaller than

the physical sector size. Aligning to a physical sector (or optimal

I/O) size boundary is recommended, or performance may be impacted.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1): 1

First sector (4096-209715199, default 4096):

Using default value 4096

Last sector, +sectors or +size{K,M,G} (4096-209715199, default 209715199):

Using default value 209715199

Partition 1 of type Linux and of size 100 GiB is set

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.
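The sizes that fdisk reports follow directly from the sector counts. Here is a small worked example, assuming 512-byte logical sectors (which matches the bytes and sectors columns in the listings above); sectors_to_gib is a hypothetical helper name.

```shell
# Hypothetical helper: converts a sector count to whole GiB,
# assuming 512-byte logical sectors.
sectors_to_gib() {
    echo $(( $1 * 512 / 1024 / 1024 / 1024 ))
}

sectors_to_gib 1048576000   # prints 500 (the 500 GiB ASM volumes)
sectors_to_gib 209715200    # prints 100 (sectors 0-209715199 of the disk partitioned above)
```

The partition itself starts at sector 4096, so it is slightly smaller than the whole device; fdisk rounds it to 100 GiB in its message.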

WE ARE RESTARTING NODE1 AND NODE2 SERVERS.

IN THE NEXT STEP, WE WILL PREPARE OUR DISCS FOR ASM.

ASMLib is highly recommended for systems that will use ASM for shared storage within the cluster, because of the performance and manageability benefits that it provides. Perform the following steps to install and configure ASMLib on the cluster nodes:

NOTE: ASMLib automatically provides LUN persistence, so when using ASMLib there is no need to manually configure LUN persistence for the ASM devices on the system.

Download the following packages from the ASMLib OTN page. If you are an Enterprise Linux customer, you can obtain the software through the Unbreakable Linux Network.

NOTE: The ASMLib kernel driver MUST match the kernel revision number. The kernel revision number of your system can be identified by running the "uname -r" command. Also, be sure to download the set of RPMs that pertains to your platform architecture; in our case this is x86_64.

Enter the following command to determine the kernel version and architecture of the system:

# uname -rm

Depending on your operating system version, download the required Oracle Automatic Storage Management library driver packages and driver:

http://www.oracle.com/technetwork/server-storage/linux/asmlib/index-101839.html

See Also: My Oracle Support note 1089399.1 for information about Oracle ASMLIB support with Red Hat distributions:

https://support.oracle.com/rs?type=doc&id=1089399.1

ORACLE ASM ON NODE1 AND NODE2

CVU DISK PACKAGE :-

[root@node1 rpm]#

[root@node1 rpm]#

[root@node1 rpm]# CVUQDISK_GRP=oinstall; export CVUQDISK_GRP

[root@node1 rpm]#

[root@node1 rpm]#

[root@node1 rpm]# ls -lrt

total 12

-rw-r--r--. 1 grid oinstall 11412 Mar 13 2019 cvuqdisk-1.0.10-1.rpm

[root@node1 rpm]#

[root@node1 rpm]# rpm -iv cvuqdisk-1.0.10-1.rpm

Verifying packages...

Preparing packages...

cvuqdisk-1.0.10-1.x86_64

[root@node1 rpm]#

[root@node1 rpm]#

[root@node1 rpm]# rpm -qi cvuqdisk

Name : cvuqdisk

Version : 1.0.10

Release : 1

Architecture: x86_64

Install Date: Mon 21 Feb 2022 02:07:27 PM CET

Group : none

Size : 22920

License : Oracle Corp.

Signature : (none)

Source RPM : cvuqdisk-1.0.10-1.src.rpm

Build Date : Wed 13 Mar 2019 10:25:43 AM CET

Build Host : rpm-build-host

Relocations : (not relocatable)

Vendor : Oracle Corp.

Summary : RPM file for cvuqdisk

Description :

This package contains the cvuqdisk program required by CVU.

cvuqdisk is a binary that assists CVU in finding scsi disks.

To install this package, you must first become 'root' and then set the

environment variable 'CVUQDISK_GRP' to the group that will own cvuqdisk.

If the CVUQDISK_GRP is not set, by default "oinstall" will be the owner group

of cvuqdisk.

Note:- Copy the cvuqdisk package to node2 and install it there in the same way.

ORACLEASM CONFIGURATION ON NODE1 AND NODE2

[root@node1 ~]# oracleasm configure -I

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid

Default group to own the driver interface []: asmadmin

Start Oracle ASM library driver on boot (y/n) [n]: y

Scan for Oracle ASM disks on boot (y/n) [y]: y

The next two configuration options take substrings to match device names.

The substring “sd” (without the quotes), for example, matches “sda”, “sdb”,

etc. You may enter more than one substring pattern, separated by spaces.

The special string “none” (again, without the quotes) will clear the value.

Device order to scan for ASM disks []:

Devices to exclude from scanning []:

Directories to scan []:

Use device logical block size for ASM (y/n) [n]: y

Writing Oracle ASM library driver configuration: done

[root@node2 ~]# oracleasm configure -I

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid

Default group to own the driver interface []: asmadmin

Start Oracle ASM library driver on boot (y/n) [n]: y

Scan for Oracle ASM disks on boot (y/n) [y]: y

The next two configuration options take substrings to match device names.

The substring “sd” (without the quotes), for example, matches “sda”, “sdb”,

etc. You may enter more than one substring pattern, separated by spaces.

The special string “none” (again, without the quotes) will clear the value.

Device order to scan for ASM disks []:

Devices to exclude from scanning []:

Directories to scan []:

Use device logical block size for ASM (y/n) [n]: y

Writing Oracle ASM library driver configuration: done
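After answering the questions on both nodes, it is worth confirming that the driver interface really belongs to grid:asmadmin. The sketch below assumes the KEY=value lines (ORACLEASM_UID, ORACLEASM_GID) that `oracleasm configure` prints when it is run without options; asm_owner_ok is a hypothetical helper name.

```shell
# Hypothetical helper: reads `oracleasm configure` output on stdin and
# succeeds only if the owner user ($1) and group ($2) match.
asm_owner_ok() {
    awk -F= -v u="$1" -v g="$2" '
        { gsub(/"/, "", $2) }                 # values may be quoted
        $1 == "ORACLEASM_UID" { uid = $2 }
        $1 == "ORACLEASM_GID" { gid = $2 }
        END { exit !(uid == u && gid == g) }
    '
}

# Typical use on each node (not executed here):
#   oracleasm configure | asm_owner_ok grid asmadmin && echo "ASMLib owner OK"
```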

OUR DISCS ARE ALMOST READY. NEXT, WE WILL LOAD THE KERNEL DRIVER WITH ORACLEASM INIT AND THEN STAMP THE DISCS

ACTIVATE KERNEL ON NODE1 AND NODE2

[root@node1 ~]# oracleasm init

Creating /dev/oracleasm mount point: /dev/oracleasm

Loading module "oracleasm": oracleasm

Configuring "oracleasm" to use device logical block size

Mounting ASMlib driver filesystem: /dev/oracleasm

[root@node1 ~]#

[root@node2 ~]# oracleasm init

Creating /dev/oracleasm mount point: /dev/oracleasm

Loading module "oracleasm": oracleasm

Configuring "oracleasm" to use device logical block size

Mounting ASMlib driver filesystem: /dev/oracleasm

[root@node2 ~]#

Disk /dev/mapper/asmvg04-ASM_New_4: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg02-ASM_New_2: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg01-ASM_New_1: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg05-ASM_New_5: 200 GiB, 214748364800 bytes, 419430400 sectors

Disk /dev/mapper/asmvg06-ASM_New_6: 200 GiB, 214748364800 bytes, 419430400 sectors

Disk /dev/mapper/asmvg07-ASM_New_7: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk /dev/mapper/asmvg07-ASM_New_8: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk /dev/mapper/asmvg07-ASM_New_9: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk /dev/mapper/asmvg03-ASM_New_3: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg02-ASM_New_2: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg06-ASM_New_6: 200 GiB, 214748364800 bytes, 419430400 sectors

Disk /dev/mapper/asmvg04-ASM_New_4: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg01-ASM_New_1: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg03-ASM_New_3: 500 GiB, 536870912000 bytes, 1048576000 sectors

Disk /dev/mapper/asmvg07-ASM_New_7: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk /dev/mapper/asmvg07-ASM_New_8: 10 GiB, 10737418240 bytes, 20971520 sectors

Disk /dev/mapper/asmvg05-ASM_New_5: 200 GiB, 214748364800 bytes, 419430400 sectors

Disk /dev/mapper/asmvg07-ASM_New_9: 10 GiB, 10737418240 bytes, 20971520 sectors

!!! WE WILL STAMP THE DISKS.

WE DO THIS OPERATION ONLY ON NODE1 !!!

Repeat the following operations for every disc you have been given.

We are going to create the OCR and voting disks for the clusterware with normal redundancy, which needs three disks.

NODE1:-

[root@node1 ~]# oracleasm createdisk OCR_VD1 /dev/mapper/asmvg07-ASM_New_7

OCR_VD1

[root@node1 ~]#

[root@node1 ~]# oracleasm scandisks

Reloading disk partitions: done

Cleaning any stale ASM disks...

Scanning system for ASM disks...

[root@node1 ~]#

[root@node1 ~]# oracleasm listdisks

OCR_VD1

[root@node1 ~]#

[root@node1 ~]# oracleasm createdisk OCR_VD2 /dev/mapper/asmvg07-ASM_New_8

Writing disk header: done

Instantiating disk: done

[root@node1 ~]#

[root@node1 ~]# oracleasm createdisk OCR_VD3 /dev/mapper/asmvg07-ASM_New_9

Writing disk header: done

Instantiating disk: done

[root@node1 ~]#

[root@node1 ~]# oracleasm listdisks

OCR_VD1

OCR_VD2

OCR_VD3

NODE2:-

OCR_VD1

[root@node2 ~]#

[root@node2 ~]# oracleasm listdisks

OCR_VD1

[root@node2 ~]#

[root@node2 ~]# oracleasm scandisks

Reloading disk partitions: done

Cleaning any stale ASM disks...

Scanning system for ASM disks...

Instantiating disk "OCR_VD2"

Instantiating disk "OCR_VD3"

[root@node2 ~]#

[root@node2 ~]#

[root@node2 ~]# oracleasm listdisks

OCR_VD1

OCR_VD2

OCR_VD3
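Before moving on, every stamped label should be visible on both nodes. Below is a minimal sketch that reports the labels still missing from a node; missing_asm_disks is a hypothetical helper name and the label list is simply the three disks created above.

```shell
# Hypothetical helper: prints every expected label (arguments) that is
# absent from `oracleasm listdisks` output supplied on stdin.
missing_asm_disks() {
    found=$(cat)
    for label in "$@"; do
        printf '%s\n' "$found" | grep -qxF "$label" || echo "$label"
    done
}

# Typical use on each node (not executed here):
#   oracleasm listdisks | missing_asm_disks OCR_VD1 OCR_VD2 OCR_VD3
# No output means all three disks are visible; otherwise run
# `oracleasm scandisks` on that node and check again.
```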

CLUVFY UTILITY :-

The Cluster Verification Utility (CVU) performs system checks in preparation for installation, patch updates, or other system changes. Using CVU ensures that you have completed the required system configuration and preinstallation steps so that your Oracle Grid Infrastructure or Oracle Real Application Clusters (Oracle RAC) installation, update, or patch operation completes successfully.

Cluvfy utility software download link (30839369):-

Patch 30839369: Standalone CVU version 19.11 April 2021

Note:- After rebooting the servers, all errors were resolved.
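The pre-installation check itself is not shown in this post, so here is a sketch of how it is usually started. It assumes the `stage -pre crsinst` check and the runcluvfy.sh script from the unzipped grid home (the standalone CVU download ships bin/cluvfy, which takes the same arguments); cluvfy_precheck_cmd is a hypothetical helper that only prints the command line for the given nodes.

```shell
# Hypothetical helper: prints the CVU pre-installation check command
# for the node names given as arguments.
cluvfy_precheck_cmd() {
    # Join the node names with commas: node1 node2 -> node1,node2
    nodes=$(IFS=,; echo "$*")
    echo "./runcluvfy.sh stage -pre crsinst -n $nodes -verbose"
}

cluvfy_precheck_cmd node1 node2
# prints: ./runcluvfy.sh stage -pre crsinst -n node1,node2 -verbose
```

Run the printed command as the grid user from the directory that contains runcluvfy.sh, and fix every FAILED item before starting gridSetup.sh.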

OUR DISKS ARE READY. NOW WE CAN START SETUP.

FIRST, WE WILL INSTALL GRID

ORACLE DATABASE 19c GRID INFRASTRUCTURE (19.3) FOR LINUX X86-64 IS DOWNLOADED AND UPLOADED TO NODE1 SERVER

[root@node1 ~]# cd /u01/app/grid/19.3.0/gridhome_1

[root@node1 gridhome_1]#

[root@node1 gridhome_1]# ls -lrt

total 2821476

-rwxr--r-- 1 root root 2889184573 Feb 14 00:13 LINUX.X64_193000_grid_home.zip

[root@node1 gridhome_1]#

[root@node1 gridhome_1]# chown grid:oinstall LINUX.X64_193000_grid_home.zip

[root@node1 gridhome_1]#

[root@node1 gridhome_1]# su - grid

[grid@node1 ~]$

[grid@node1 ~]$ cd /u01/app/grid/19.3.0/gridhome_1

[grid@node1 gridhome_1]$

[grid@node1 gridhome_1]$ unzip LINUX.X64_193000_grid_home.zip

[grid@node1 gridhome_1]$

[grid@node1 ~]$ cd /u01/app/grid/19.3.0/gridhome_1/cv/admin

[grid@node1 admin]$ pwd

/u01/app/grid/19.3.0/gridhome_1/cv/admin

[grid@node1 admin]$ vi cvu_config

CV_ASSUME_DISTID=OEL8.1
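The same change can be made without opening vi. The sketch below is one possible way, assuming the file contains at most a commented or uncommented CV_ASSUME_DISTID line; set_cvu_distid is a hypothetical helper name.

```shell
# Hypothetical helper: sets CV_ASSUME_DISTID in a cvu_config file.
# $1 = path to cvu_config, $2 = distribution id (for example OEL8.1)
set_cvu_distid() {
    file=$1
    distid=$2
    pattern='^#*[[:space:]]*CV_ASSUME_DISTID='
    if grep -q "$pattern" "$file"; then
        # Replace the existing (possibly commented-out) line.
        sed "s|$pattern.*|CV_ASSUME_DISTID=$distid|" "$file" > "$file.new" &&
            cat "$file.new" > "$file" && rm -f "$file.new"
    else
        # No such line yet: append it.
        echo "CV_ASSUME_DISTID=$distid" >> "$file"
    fi
}

# Typical use as the grid user (not executed here):
#   set_cvu_distid /u01/app/grid/19.3.0/gridhome_1/cv/admin/cvu_config OEL8.1
```

Writing back with cat keeps the original file's owner and permissions, which matters because the file belongs to the grid home.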

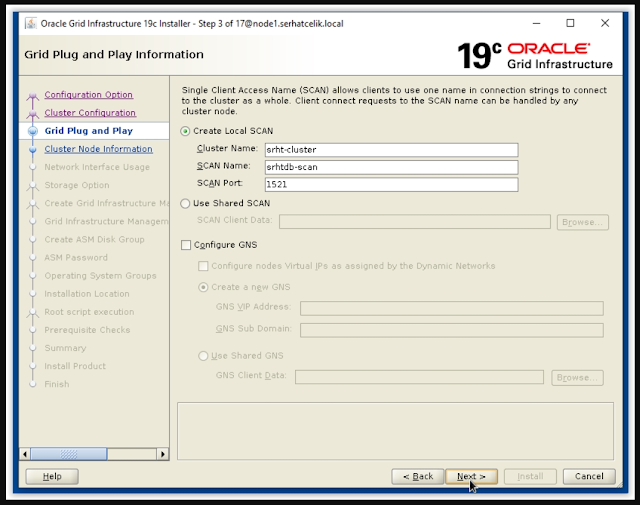

ORACLE AND GRID USERS ARE ADDED TO THE SUDOERS FILE WITH VISUDO

[root@node1 ~]# visudo

oracle ALL=(ALL) ALL

oracle ALL=NOPASSWD: ALL

grid ALL=(ALL) ALL

grid ALL=NOPASSWD: ALL

WE HAVE COME TO THE MOST CRITICAL POINT.

WE WILL START THE GRID SETUP.

THE MOST IMPORTANT POINT HERE IS THE APPLICATION WE USE FOR SSH. I WAS USING THE ZOC APP, AND THE SETUP SCREEN DID NOT APPEAR EVEN THOUGH THE DISPLAY SETTINGS WERE DONE.

I SWITCHED TO THE MOBAXTERM APPLICATION AS THE SOLUTION. AFTER I RAN THE FOLLOWING COMMANDS, THE SETUP SCREEN STARTED. THERE IS ANOTHER IMPORTANT POINT: YOU MUST PROVIDE THE IP OF THE WINDOWS MACHINE FROM WHICH YOU START THE INSTALLATION.

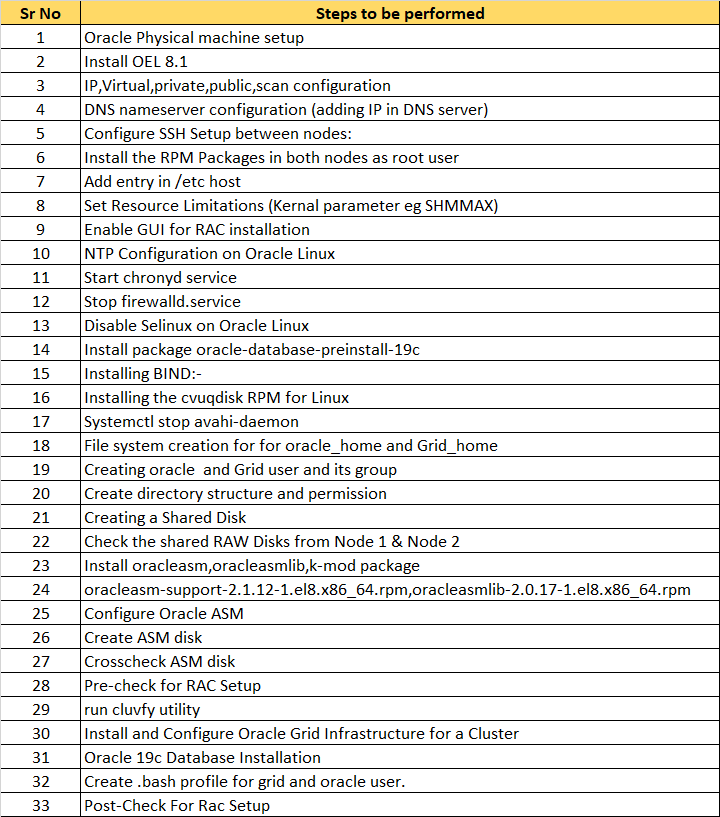

[root@node1 ~]# su - grid

Last login: Sun Feb 14 14:08:16 +03 2021 on pts/0

[grid@node1 ~]$

[grid@node1 ~]$ cd $GRID_HOME

[grid@node1 gridhome_1]$

[grid@node1 gridhome_1]$ sh gridSetup.sh

ERROR: Unable to verify the graphical display setup. This application requires X display. Make sure that xdpyinfo exist under PATH variable.No X11 DISPLAY variable was set, but this program performed an operation which requires it.

[grid@node1 gridhome_1]$

[grid@node1 gridhome_1]$ export DISPLAY=10.6.176.54:0.0

[grid@node1 gridhome_1]$

[grid@node1 gridhome_1]$ sh gridSetup.sh

Launching Oracle Grid Infrastructure Setup Wizard…

[grid@node1 gridhome_1]$

I WILL CONTINUE THE NEXT STEPS WITH THE SCREENSHOTS

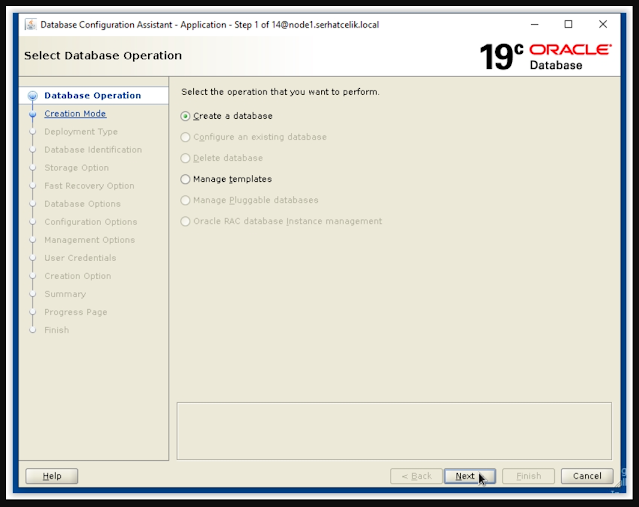

Step By Step Oracle 19C RAC Installation on Oracle Linux Part-3 DATABASE

3. INSTALL ORACLE DATABASE 19C CDB & PDB

3.1. INSTALLATION OF ORACLE DATABASE 19C SOFTWARE

ORACLE DATABASE 19C (19.3) FOR LINUX X86-64 IS DOWNLOADED AND UPLOADED TO NODE1 SERVER

[root@node1 ~]# su - oracle

Last login: Sat Feb 20 13:30:57 +03 2021

[oracle@node1 ~]$

[oracle@node1 ~]$ cd $ORACLE_HOME/

[oracle@node1 dbhome_1]$

[oracle@node1 dbhome_1]$ ls -lrt

-rwxr--r-- 1 root root 3059705302 Feb 20 11:12 LINUX.X64_193000_db_home.zip

[oracle@node1 dbhome_1]$

[oracle@node1 dbhome_1]$ unzip LINUX.X64_193000_db_home.zip

[oracle@node1 dbhome_1]$

WE HAVE COME TO THE MOST CRITICAL POINT.

WE WILL START THE SETUP.

THE MOST IMPORTANT POINT HERE IS THE APPLICATION WE USE FOR SSH. I WAS USING THE ZOC APP, AND THE SETUP SCREEN DID NOT APPEAR EVEN THOUGH THE DISPLAY SETTINGS WERE DONE.

I SWITCHED TO THE MOBAXTERM APPLICATION AS THE SOLUTION. AFTER I RAN THE DISPLAY COMMANDS, THE SETUP SCREEN STARTED. THERE IS ANOTHER IMPORTANT POINT: YOU MUST PROVIDE THE IP OF THE WINDOWS MACHINE FROM WHICH YOU START THE INSTALLATION.

I WILL CONTINUE THE NEXT STEPS WITH THE SCREENSHOTS

To get rid of the errors above, apply the steps below on Node1 and Node2.

Change OPTIONS="-g" to OPTIONS="-x" in /etc/sysconfig/ntpd, then restart the ntpd service.

[root@node1 /]# more /etc/sysconfig/ntpd

OPTIONS="-x"

[root@node1 /]#

[root@node1 /]# systemctl restart ntpd.service

[root@node1 /]#

[root@node2 /]# more /etc/sysconfig/ntpd

OPTIONS="-x"

[root@node2 /]#

[root@node2 /]# systemctl restart ntpd.service

[root@node2 /]#

When you press Check Again, the failed status should normally disappear. If the check still does not pass, cancel and start the installation again.

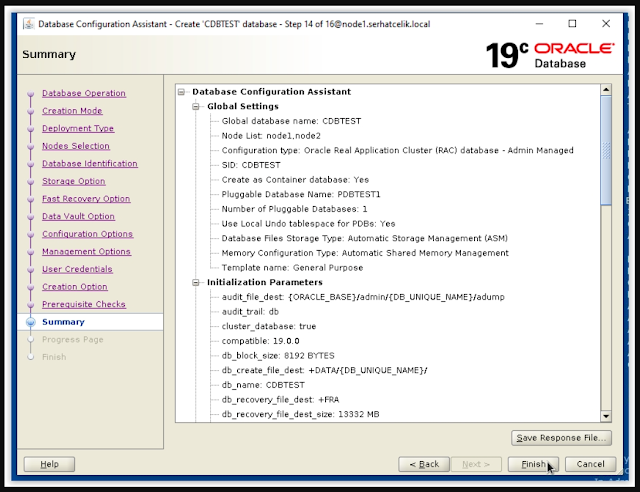

3.2. INSTALLATION ORACLE DATABASE 19C AS CDB & PDB

ADD BELOW ENTRIES TO ORACLE BASH PROFILE ON NODE1 AND NODE2

[root@node1 ~]# su - oracle

Last login: Sat Feb 20 17:58:50 +03 2021 on pts/0

[oracle@node1 ~]$ vi .bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_HOSTNAME=node1.serhatcelik.local

export ORACLE_UNQNAME=CDBTEST

export ORACLE_SID=CDBTEST1

export ORACLE_BASE=/u01/app/oracle/database/19.3.0/

export DB_HOME=$ORACLE_BASE/dbhome_1

export ORACLE_HOME=$DB_HOME

export ORACLE_TERM=xterm

export PATH=/usr/sbin:/usr/local/bin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

[root@node2 ~]# su - oracle

Last login: Sat Feb 20 17:58:50 +03 2021 on pts/0

[oracle@node2 ~]$ vi .bash_profile

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_HOSTNAME=node2.serhatcelik.local

export ORACLE_UNQNAME=CDBTEST

export ORACLE_SID=CDBTEST2

export ORACLE_BASE=/u01/app/oracle/database/19.3.0/

export DB_HOME=$ORACLE_BASE/dbhome_1

export ORACLE_HOME=$DB_HOME

export ORACLE_TERM=xterm

export PATH=/usr/sbin:/usr/local/bin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib
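The two profiles differ only in ORACLE_HOSTNAME and ORACLE_SID: each instance SID is the database unique name followed by the instance number. A small sketch of that naming rule follows, assuming the instance number equals the number at the end of the hostname (true for node1 and node2 here, but only an assumption in general); instance_sid is a hypothetical helper name.

```shell
# Hypothetical helper: derives the instance SID for a node.
# $1 = database unique name, $2 = hostname ending in the node number
instance_sid() {
    host=${2%%.*}                                   # drop the domain part
    node_no=$(printf '%s\n' "$host" | sed 's/^.*[^0-9]\([0-9][0-9]*\)$/\1/')
    echo "$1$node_no"
}

instance_sid CDBTEST node1.serhatcelik.local   # prints CDBTEST1
instance_sid CDBTEST node2.serhatcelik.local   # prints CDBTEST2
```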

CREATING NEW DISC GROUP FOR FRA (IF YOU HAVE FRA, SKIP THIS STEP)

We need a shared disk for the RAC installation. First, I created a 150GB volume for the fast recovery area on my Dell storage. Second, the server cluster consisting of Node1 and Node2 was already created while installing grid. Lastly, the fast recovery volume is mapped to the server cluster using iSCSI. You should get help from your Linux system admin / storage admin. I did all the operations myself because the storage and Linux servers are managed by us.

CHECK DISKS ON NODE1 AND NODE2

The newly created Fast Recovery Area disk is shown below as 150GB. (The 100GB disk is the DATA disk that was created while installing grid.)

[root@node1 /]# multipath -ll

mpathb (36000d3100cfea6000000000000000025) dm-3 COMPELNT,Compellent Vol

size=100G features='1 queue_if_no_path' hwhandler='0' wp=rw

`-+- policy='service-time 0' prio=1 status=active

  |- 5:0:0:1 sda 8:0 active ready running

  |- 7:0:0:1 sdb 8:16 active ready running

  |- 8:0:0:1 sdd 8:48 active ready running

  `- 9:0:0:1 sdc 8:32 active ready running

mpatha (36000d3100cfea6000000000000000026) dm-2 COMPELNT,Compellent Vol

size=150G features='1 queue_if_no_path' hwhandler='0' wp=rw

`-+- policy='service-time 0' prio=1 status=active

  |- 2:0:0:2 sde 8:64 active ready running

  |- 3:0:0:2 sdf 8:80 active ready running

  |- 4:0:0:2 sdg 8:96 active ready running

  `- 6:0:0:2 sdh 8:112 active ready running

[root@node1 /]#

[root@node2 ~]# multipath -ll

mpathb (36000d3100cfea6000000000000000025) dm-3 COMPELNT,Compellent Vol

size=100G features='1 queue_if_no_path' hwhandler='0' wp=rw

`-+- policy='service-time 0' prio=1 status=active

  |- 5:0:0:1 sda 8:0 active ready running

  |- 7:0:0:1 sdc 8:32 active ready running

  |- 8:0:0:1 sdd 8:48 active ready running

  `- 9:0:0:1 sdb 8:16 active ready running

mpatha (36000d3100cfea6000000000000000026) dm-2 COMPELNT,Compellent Vol

size=150G features='1 queue_if_no_path' hwhandler='0' wp=rw

`-+- policy='service-time 0' prio=1 status=active

  |- 2:0:0:2 sde 8:64 active ready running

  |- 3:0:0:2 sdf 8:80 active ready running

  |- 4:0:0:2 sdg 8:96 active ready running

  `- 6:0:0:2 sdh 8:112 active ready running

[root@node2 ~]#

!!! APPLY BELOW STEPS ONLY ON NODE1 !!! NO OPERATION MUST BE DONE ON NODE2 !!!

!!! DISCS IN THE DISC GROUP WE WILL CREATE FOR ASM SHOULD BE THE SAME SIZE !!!

!!! FURTHER, OUR DISCS MUST BE UNFORMATTED AND UNUSED !!!

DISC PARTITION PROCESS IS DONE ONLY ON NODE1

Repeat the following operations for every disc that will be used. I did it once because I only added one disc.

[root@node1 /]# fdisk /dev/mapper/mpatha

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0xbb6f9348.

The device presents a logical sector size that is smaller than

the physical sector size. Aligning to a physical sector (or optimal

I/O) size boundary is recommended, or performance may be impacted.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1): 1

First sector (4096-314572799, default 4096):

Using default value 4096

Last sector, +sectors or +size{K,M,G} (4096-314572799, default 314572799):

Using default value 314572799

Partition 1 of type Linux and of size 150 GiB is set

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 22: Invalid argument.

The kernel still uses the old table. The new table will be used at

the next reboot or after you run partprobe(8) or kpartx(8)

Syncing disks.

[root@node1 /]#

!!! WE WILL STAMP THE DISCS. WE DO THIS OPERATION ONLY ON NODE1 !!!

Repeat the following operations for every disc you have been given. I did it once because I only added one disc.

[root@node1 /]# oracleasm createdisk fra1 /dev/mapper/mpatha

Writing disk header: done

Instantiating disk: done

[root@node1 /]#

CHECK ASM DISCS

[root@node1 /]# ll /dev/oracleasm/disks/

total 0

brw-rw---- 1 grid asmadmin 8, 17 Feb 20 20:45 DATA1

brw-rw---- 1 grid asmadmin 252, 2 Feb 20 20:45 FRA1

[root@node1 /]#

WE RUN THE FOLLOWING COMMAND ON NODE2 SO THAT IT SEES THE NEW DISC

[root@node2 ~]# oracleasm scandisks

Reloading disk partitions: done

Cleaning any stale ASM disks...

Scanning system for ASM disks...

Instantiating disk "FRA1"

[root@node2 ~]#

CREATE ASM DISC GROUP VIA ASMCA (AUTOMATIC STORAGE MANAGEMENT CONFIGURATION ASSISTANT)
