1) MISSING LEADING EDGE OF INDEX

One of the first things you want to check when Oracle is not using an index is if you’ve included the leading edge.

That is the first column of the index in your where of clause.

If you don’t include the leading edge of the index as part your where of clause, Oracle will not use your index except in the special circumstance of an index skip scan operation.

So this is usually the first thing to check.

In above diagram, index is on

SQL> create index IX_APPLICATION_NAME applications(last_name, first_name);

SQL Query is

SQL> select application_id, first_name, last_name from application where first_name='Jason';

That's why, Index will not be used because in WHERE clause only first_name is used.

If you’re not using the first column in the index, you have two choices.

First, you can add that column into the where clause of your SQL statement if you know the value.

If you know the value, this is probably the best approach, because we always want to give Oracle as much information as possible so it can efficiently process our statement.

Our other option is to consider reordering the columns in our index, or adding a new index that has the columns we do have in our SQL statement at the front of the index.

If the SQL statement we are evaluating is representative of what columns will be included in the where clause most of the time. This is probably the best option to investigate.

Our goal is to have the columns that are included in our where clause most frequently at the front part of the index.

And any column that is always included with the where clause should be the first column in the index. Because if this first column is not present in our statement, Oracle won’t use the index.

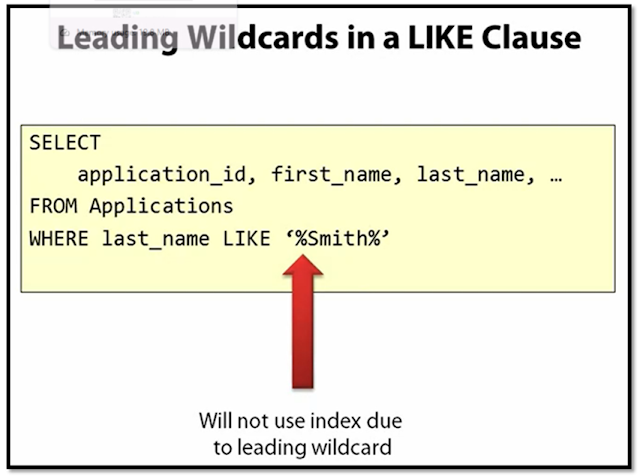

3) USING A LIKE CLAUSE AND A LEADING WILDCARD

There are times when someone decides to wrap the value that they are searching for in both leading and trailing wildcard characters as shown on the slide.

Usually the reason for doing this is because they are searching for a string which is not at the start of the value in the column.

Or perhaps they’re trying to do some sort of keyword search against the text contained in the column.

In this case, the trailing wildcard is not a problem.

The issue is the leading wildcard character.

Whenever we have a leading wildcard carrier, like we see here, Oracle will not use an index on that column.

Recall the data in an index is in sorted order.

Just like in a telephone book, it doesn’t help you very much if you know the second letter in someone’s last name, but you don’t know the first character.

The telephone book, like an Oracle index, is predicated on the fact that you know information not just from the leading edge of the index but from the beginning of the item you are searching for. Otherwise the tree structure of the index doesn’t do us much good.

FUNCTION IN THE WHERE CLAUSE

One of the least understood aspects of indexes.

Is that if you include a function on a column in your where clause.

And Oracle won’t be able to use any standard indexes on that column.

Note this applies to using a function on the column in the WHERE clause, not the value itself.

When you include a function on a column, what Oracle has to do is to read each row out of the table, compute the value from the function, and then perform a comparison to the value provided.

Because what you’re asking Oracle to do is to find all the roads that match the computed value from the function, not the actually value stored in the table and in the index.

Too often, someone is trying to fix a functional problem, like needing to perform a case insensitive search. Or searching for some string that’s embedded into another field with a substring function.

Consequently, they become focused on the functional aspects of the problem and forget about the performance aspects.

But when you use a function in this way, Oracle won’t be able to use any of the regular indexes on the table.

One solution to this problem is to create a function-based index over the computed value.

If you’re doing something like a case-insensitive search or phonetic search, this should be your first option.

Function-based indexes work very well, and they’re a proven solution to these problems.

Just make sure that the function you use in your WHERE clause matches the function used in your create index statement exactly.

Otherwise Oracle won’t be able to match up your statement with the function based index.

The other types of functions I frequently see used in WHERE clauses are functions to do some sort of data cleanup or data conversion.

These are functions like trim, to_date, to convert a date stored as text into a date data type, or substring, to extract out part of our string for comparison.

In each of these cases, you could use a function-based index and this would work.

But you may want to ask yourself a deeper question in each of these cases. And that is, is the data modeled correctly?

If you have leading and trailing white spaces, why is that not being cleaned up at the front end when the data is inserted?

If a data store has a large, hard data type, why is this?

Why isn’t that date being stored as a date data type. And if you’re constantly using substring to extract a value out of a larger field maybe that field should be split into two fields.

In each of these cases there very well could be a performance problem.

But this performance problem is most likely just a symptom of a larger data modeling problem. And that is probably causing pain in other areas of your application as well.

DATA TYPE CONVERSION IN THE WHERE CLAUSE

Let’s imagine for a moment that we have a definition of a table like is shown on the below.

And now we run a query like the query shown at the bottom of the slide against this table.

What is important to know is that in the table the course number column is defined as a VARCHAR2 data type.

So a data type that’s intended for string values.

But in our query we have specified the course number just as a number.

In this case the number 101.

So what we have here is a mismatch between the data type and the table and the SQL statement.

Now Oracle will not error, it will run the statement for you just fine.

But it will not use any indexes that have been built over the course number of column due to this implicit data conversion that’s going on.

The solution to this problem is very easy.

You want to make sure that the data types you use in your SQL statements always match up with the data types defined in the table.

This is more of an issue of attention to detail rather than anything else. But you do need to be mindful of these data types.

Otherwise your index may not get used, and the performance of your statement will be adversely affected.

Also when you use bind variables in your application it is best to explicitly specify the data type that bind variables should use.

If you don’t Oracle will do the best job it can to infer the data type from the value you’ve provided and the column type in the table.

And almost always Oracle infers correctly. But Oracle can make an incorrect guess.

And so I always make sure to specify the data type directly and then I avoid this problem altogether.

Finally, it is important to pay attention to the data types of columns in your tables as you define these tables in your schema, while this is more of a data modeling issue than a performance issue.

Choosing the incorrect data type can cause a lot of problems down the road, including both performance problems and just making your database scheme more difficult to use.

Most people expect dates to be stored as a date or time stamp data type, number value to be stored as numbers and so on.

When you start deviating from these conventions is where you get into trouble.

Because someone will make an assumption about a column type that isn’t true.

So put some thought into what data types your columns should be, and you’ll save yourself a lot of trouble going forward.

OUTDATED DATABASE STATISTICS

When the Oracle Optimizer evaluates your SQL statement and generates an execution plan.

It uses the database statistics for all the tables and indexes involved with the statement to determine the most efficient execution plan.

If these statistics are out of date or do not reflect the current state of the database objects, then it is likely that the optimizer will generate a suboptimal execution plan.

For example, if the statistics indicate that the database table is very small in size, say just 1, 000 rows.

Then Oracle will assume it is efficient to perform a full table scan of the table due to the small size.

In reality, if the table is very large, you’d want to use an index operation.

Because Oracle has incorrect information about the table, it’s going to make a bad choice and not use an index in this situation.

Typically, in production environments, your DBA will set up a recurring job that will keep database stats up to date, so the situation occurs less frequently.

If you are in a test environment though, things may be less automated.

So you want to be conscious of how current your database statistics are. Stats can also be out of date if you’ve just done a bulk load or bulk deletion of data.

Since these operations can significantly change the amount of data and the distribution of data in a table.

And, sometimes, after you create a new index, Oracles doesn’t seem to have very good information about that index.

So it may not use that index in all the situations it should.

Technically, from Oracles 10g onward, you’re not supposed to have to regather stats after creating a new index. But I’ve personally seen some situations where I’ve got some strange results. So I’ve gotten into the practice of gathering stats after creating a new index. Just to be on the safe side.

If you are concerned about your stats being out of date, the solution to get up to date stats is very simple.

You just want to use the DBMS stats package.

You gather your stats on a table as shown in the top item and this will cascade down to all indexes on the table as well.

If the table is particularly large and you just want to sample a percentage of the rows, this can be done as well to speed up the gathering stats process.

If you want to gather stats on every object in a schema, you can do that with the syntax at the bottom of the slide.

You prepare, though, that if we have a large schema with a lot of tables, this could take considerable time.

Whatever route you choose though, having up-to-date statistics will allow the Oracle Optimizer to make the most informed decisions about how it should execute your SQL statement. And many times, out-of-date stats are the reason why Oracle will seemingly refuse to use an index.

No comments:

Post a Comment